SASA: Semantics-Augmented Set Abstraction for Point-Based 3D Object Detection

Chen Chen, Zhe Chen, Jing Zhang, Dacheng Tao

[AAAI-22] Main Track

Abstract:

Although point-based networks are demonstrated to be accurate for 3D point cloud modeling, they are still falling behind their voxel-based competitors in 3D detection. We observe that the prevailing set abstraction design for down-sampling points may maintain too much unimportant background information that can affect feature learning for detecting objects. To tackle this issue, we propose a novel set abstraction method named Semantics-Augmented Set Abstraction (SASA). Technically, we first add a binary segmentation module as the side output to help identify foreground points. Based on the estimated point-wise foreground scores, we then propose a semantics-guided point sampling algorithm to help retain more important foreground points during down-sampling. In practice, SASA shows to be effective in identifying valuable points related to foreground objects and improving feature learning for point-based 3D detection. Additionally, it is an easy-to-plug-in module and able to boost various point-based detectors, including single-stage and two-stage ones. Extensive experiments on the popular KITTI and nuScenes datasets validate the superiority of SASA, lifting point-based detection models to reach comparable performance to state-of-the-art voxel-based methods. Code will be available at https://github.com/blakechen97/SASA.

Introduction Video

Sessions where this paper appears

-

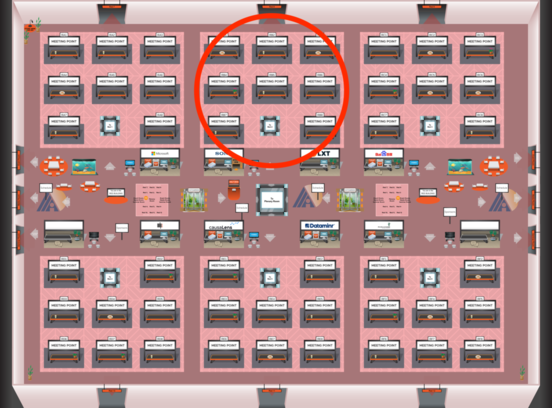

Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Red 2

Red 2

-

Poster Session 7

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Red 2

Red 2