Detecting Human-Object Interactions with Object-Guided Cross-Modal Calibrated Semantics

Hangjie Yuan, Mang Wang, Dong Ni, Liangpeng Xu

[AAAI-22] Main Track

Abstract:

Human-Object Interaction (HOI) detection is an essential task for understanding human-centric images from a fine-grained perspective. Although end-to-end HOI detection models thrive, their paradigm of parallel human/object detection and verb class prediction loses a merit of two-stage methods: the object-guided hierarchy. The object in an HOI triplet gives direct clues to the verb to be predicted. In this paper, we aim to boost end-to-end models with object-guided statistical priors. Specifically, we propose to utilize a Verb Semantic Model (VSM) and use semantic aggregation to profit from this object-guided hierarchy. A Similarity KL (SKL) loss is proposed to optimize the VSM to align with the HOI dataset's priors. To overcome the static semantic embedding problem, we propose to generate cross-modality-aware visual and semantic features by Cross-Modal Calibration (CMC). Combined, the above modules compose the Object-guided Cross-modal Calibration Network (OCN). Experiments conducted on two popular HOI detection benchmarks demonstrate the significance of incorporating the statistical prior knowledge and produce state-of-the-art performance. More detailed analysis indicates that the proposed modules serve as a stronger verb predictor and a superior method of utilizing prior knowledge. The code is available at https://github.com/JacobYuan7/OCN-HOI-Benchmark.
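
As a rough illustration of what an object-guided statistical prior can look like, the sketch below estimates the conditional frequency of each verb given an object class from annotated (object, verb) pairs. It is not the authors' VSM, SKL loss, or CMC module: the function name `verb_prior` and the toy annotations are hypothetical, chosen only to show how the object in a triplet can narrow down the plausible verbs.

```python
from collections import Counter, defaultdict


def verb_prior(pairs):
    """Estimate P(verb | object) from (object, verb) annotation pairs.

    Hypothetical helper for illustration; not part of the OCN codebase.
    """
    counts = defaultdict(Counter)
    for obj, verb in pairs:
        counts[obj][verb] += 1

    prior = {}
    for obj, verb_counts in counts.items():
        total = sum(verb_counts.values())
        # Normalize counts so the verbs for each object sum to 1.
        prior[obj] = {verb: n / total for verb, n in verb_counts.items()}
    return prior


# Toy annotations, invented for this example only.
toy_pairs = [
    ("bicycle", "ride"),
    ("bicycle", "ride"),
    ("bicycle", "ride"),
    ("bicycle", "hold"),
    ("cup", "hold"),
    ("cup", "drink_from"),
]
toy_prior = verb_prior(toy_pairs)
```

In this toy data, `toy_prior["bicycle"]` puts most of its mass on `ride`, while `ride` never appears for `cup`. This is the kind of object-conditioned clue that a parallel verb predictor would otherwise have to learn from visual features alone.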

Sessions where this paper appears

-

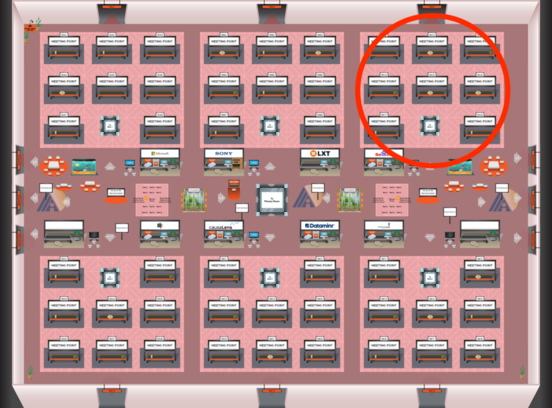

Poster Session 5

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Red 3

Red 3

-

Poster Session 12

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Red 3

Red 3