AlphaHoldem: High-Performance Artificial Intelligence for Heads-Up No-Limit Poker via End-to-End Reinforcement Learning

Enmin Zhao, Renye Yan (Institute of Automation, Chinese Academy of Sciences; School of Artificial Intelligence, University of Chinese Academy of Sciences)

[AAAI-22] Main Track

Abstract:

Heads-up no-limit Texas hold'em (HUNL) is the quintessential game with imperfect information. Representative prior works such as DeepStack and Libratus rely heavily on counterfactual regret minimization (CFR) and its variants to tackle HUNL. However, the prohibitive computational cost of CFR iterations makes it difficult for subsequent researchers to learn the CFR model in HUNL and apply it to other practical applications. In this work, we present AlphaHoldem, a high-performance and lightweight HUNL AI obtained with an end-to-end self-play reinforcement learning framework. The proposed framework adopts a pseudo-Siamese architecture that learns a direct mapping from the input state information to the output actions by playing the learned model against its different historical versions. The main technical contributions include a novel state representation of card and betting information, a multi-task self-play training loss function, and a new model evaluation and selection metric to generate the final model. In a study involving 100,000 hands of poker, AlphaHoldem defeats Slumbot and DeepStack using only one PC with three days of training. At the same time, AlphaHoldem takes only four milliseconds per decision using a single CPU core, more than 1,000 times faster than DeepStack. We will provide an online testing platform for AlphaHoldem to facilitate further studies in this direction.
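The idea of training against historical versions of the model can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the names `SnapshotPool` and `self_play` are hypothetical, integer version numbers stand in for real model parameters, and opponents are drawn uniformly at random, which is not the evaluation and selection metric the abstract refers to.

```python
import random
from collections import deque


class SnapshotPool:
    """Fixed-size pool of past model snapshots (hypothetical helper)."""

    def __init__(self, capacity, seed=0):
        # deque(maxlen=...) silently drops the oldest snapshot when full.
        self._snapshots = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def add(self, snapshot):
        self._snapshots.append(snapshot)

    def sample(self):
        # Assumption: uniform sampling over the stored snapshots.
        if not self._snapshots:
            raise ValueError("pool is empty")
        return self._rng.choice(list(self._snapshots))

    def versions(self):
        return list(self._snapshots)


def self_play(num_iterations, snapshot_every, pool_capacity, seed=0):
    """Pair the current model with a sampled historical version each step."""
    pool = SnapshotPool(pool_capacity, seed)
    version = 0          # integer stand-in for the current model's parameters
    pool.add(version)
    matchups = []
    for step in range(1, num_iterations + 1):
        opponent = pool.sample()
        matchups.append((version, opponent))
        # A real system would play hands and apply a training update here.
        if step % snapshot_every == 0:
            version += 1
            pool.add(version)
    return matchups, pool
```

Calling `self_play(num_iterations=10, snapshot_every=2, pool_capacity=3)` returns the list of (current version, opponent version) pairs together with the pool, so the opponent in each pair is always a version at or before the current one.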


Sessions where this paper appears

-

Poster Session 1

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

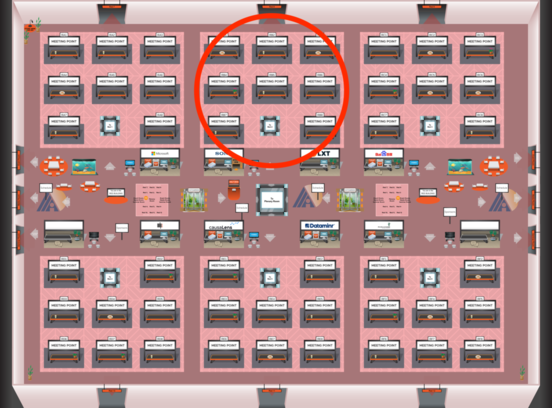

Red 2

Red 2

-

Poster Session 10

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Red 2

Red 2

-

Oral Session 1

Thu, February 24 6:30 PM - 7:45 PM (+00:00)

Thu, February 24 6:30 PM - 7:45 PM (+00:00)

Red 2

Red 2