Self-Supervised Category-Level 6D Object Pose Estimation with Deep Implicit Shape Representation

Wanli Peng, Jianhang Yan, Hongtao Wen, Yi Sun

[AAAI-22] Main Track

Abstract:

Category-level 6D pose estimation can be better generalized to unseen objects in a category compared with instancelevel 6D pose estimation. However, existing category-level 6D pose estimation methods usually require supervised training with a sufficient number of 6D pose annotations of objects which makes them difficult to be applied in real scenarios. To address this problem, we propose a self-supervised framework for category-level 6D pose estimation in this paper.We leverage DeepSDF as a 3D object representation and design several novel loss functions based on DeepSDF to help the self-supervised model predict unseen object poses without any 6D object pose labels and explicit 3D models in real scenarios.Experiments demonstrate that our method achieves comparable performance with the state-of-the-art fully supervised methods on the category-level NOCS benchmark.

Introduction Video

Sessions where this paper appears

-

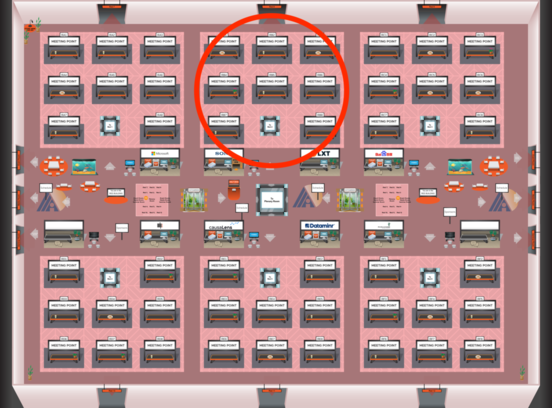

Poster Session 3

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Red 2

Red 2

-

Poster Session 8

Sun, February 27 12:45 AM - 2:30 AM (+00:00)

Sun, February 27 12:45 AM - 2:30 AM (+00:00)

Red 2

Red 2