FInfer: Frame Inference-Based Deepfake Detection for High-Visual-Quality Videos

Juan Hu, Xin Liao, Jinwen Liang, Wenbo Zhou, Zheng Qin

[AAAI-22] Main Track

Abstract:

Deepfake has attracted intense research interest in both academia and industry because of its potential security threats, and many countermeasures have been proposed to mitigate these risks. Current Deepfake detection methods perform well on low-visual-quality Deepfake media, which can be distinguished by obvious visual artifacts. However, with the development of deep generative models, the realism of Deepfake media has improved significantly and poses a serious challenge to current detection models. In this paper, we propose a frame inference-based detection framework (FInfer) to address high-visual-quality Deepfake detection. Specifically, we first learn the referenced representations of the faces in the current and future frames. Then, an autoregressive model uses the facial representations of the current frames to predict those of the future frames. Finally, a representation-prediction loss is devised to maximize the discriminability between real and fake videos. We support the effectiveness of FInfer through information-theoretic analyses: entropy and mutual information analyses indicate that the correlation between the predicted and referenced representations is higher in real videos than in high-visual-quality Deepfake videos. Extensive experiments show that our method is promising in terms of in-dataset detection performance, detection efficiency, and cross-dataset detection performance on high-visual-quality Deepfake videos.
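The three steps in the abstract (encode frames, predict future representations from current ones, score the agreement) can be illustrated with a toy NumPy sketch. This is not the FInfer implementation. The linear encoder `W`, the fixed autoregressive weights `a`, the cosine-similarity score, and the sigmoid cross-entropy standing in for the representation-prediction loss are all assumptions made for illustration, and every function name is hypothetical.

```python
import numpy as np

def encode(frames, W):
    """Toy encoder (assumption): flatten each frame, project with W, L2-normalise."""
    flat = frames.reshape(frames.shape[0], -1)
    z = flat @ W
    return z / (np.linalg.norm(z, axis=1, keepdims=True) + 1e-8)

def prediction_similarity(frames, W, a, n_current):
    """Predict a future representation from the first n_current frames and
    return its mean cosine similarity to the referenced future representations."""
    z = encode(frames, W)
    z_cur, z_fut = z[:n_current], z[n_current:]
    pred = a @ z_cur                      # toy autoregressive step: weighted sum
    pred = pred / (np.linalg.norm(pred) + 1e-8)
    return float(np.mean(z_fut @ pred))

def toy_prediction_loss(similarity, is_real, temperature=0.1):
    """Stand-in loss (assumption): cross-entropy on sigmoid(similarity / temperature),
    so real videos are pushed towards high similarity and fake ones towards low."""
    p = 1.0 / (1.0 + np.exp(-similarity / temperature))
    return float(-(is_real * np.log(p) + (1 - is_real) * np.log(1 - p)))

rng = np.random.default_rng(0)
W = rng.normal(size=(16, 4))              # 4x4 frames -> 4-d representations
frame = rng.normal(size=(4, 4))
static_video = np.stack([frame] * 5)      # perfectly predictable toy "video"
a = np.ones(3) / 3                        # average the 3 current frames

sim = prediction_similarity(static_video, W, a, n_current=3)
loss_if_real = toy_prediction_loss(sim, is_real=1)
loss_if_fake = toy_prediction_loss(sim, is_real=0)
```

In this toy setting a perfectly predictable clip yields a similarity close to 1, so labelling it real gives a much smaller loss than labelling it fake. A learned encoder and predictor, as described in the abstract, would replace the fixed `W` and `a`.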

Sessions where this paper appears

Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

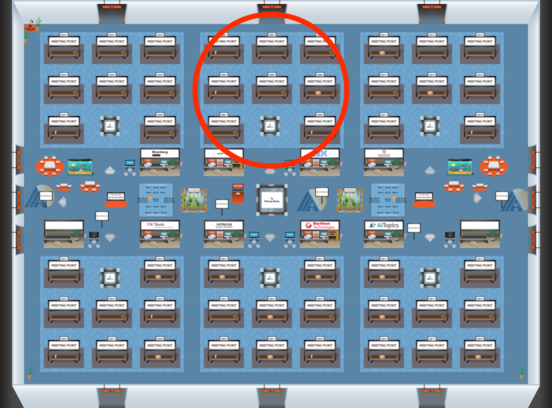

Blue 2

Poster Session 12

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Blue 2

Oral Session 12

Mon, February 28 10:30 AM - 11:45 AM (+00:00)

Blue 2
