Towards Fully Sparse Training: Information Restoration with Spatial Similarity

Weixiang Xu, Xiangyu He, Ke Cheng, Peisong Wang, Jian Cheng

[AAAI-22] Main Track

Abstract:

The 2:4 structured sparsity pattern introduced with the NVIDIA Ampere architecture, which requires every four consecutive values to contain at least two zeros, doubles math throughput for matrix multiplications. Recent works mainly focus on inference speedup via 2:4 sparsity, while training acceleration has been largely overlooked, even though backpropagation consumes around 70% of the training time. Unlike inference, however, training speedup with structured pruning is nontrivial: the fidelity of gradients must be maintained, and the additional overhead of performing 2:4 sparsification online must be kept low. For the first time, this paper proposes fully sparse training (FST), where "fully" indicates that all matrix multiplications in forward and backward propagation are structurally pruned while accuracy is maintained. To this end, we begin with a saliency analysis, investigating the sensitivity of different sparse objects to structured pruning. Based on the observation of spatial similarity among activations, we propose pruning activations with fixed 2:4 masks. Moreover, an Information Restoration block is proposed to retrieve the lost information, which can be implemented by an efficient gradient-shift operation. Evaluation of accuracy and efficiency shows that we can achieve 2× training acceleration with negligible accuracy degradation on challenging large-scale classification and detection tasks.
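To make the 2:4 constraint concrete, the following is a minimal NumPy sketch, not the authors' implementation. It contrasts two ways of producing a 2:4 pattern: an online, magnitude-based selection (a common heuristic, assumed here for illustration) that must rank the values in every group of four, and a fixed mask that needs no ranking at all. The function names `prune_2_4` and `apply_fixed_mask`, and the particular fixed pattern, are hypothetical choices and do not come from the paper.

```python
import numpy as np


def prune_2_4(x):
    """Zero the two smallest-magnitude values in each group of four
    along the last axis (magnitude-based selection, assumed heuristic)."""
    if x.shape[-1] % 4 != 0:
        raise ValueError("last dimension must be a multiple of 4")
    groups = x.reshape(-1, 4)
    # Indices of the two smallest |values| in every group of four.
    drop = np.argsort(np.abs(groups), axis=1)[:, :2]
    mask = np.ones(groups.shape, dtype=bool)
    np.put_along_axis(mask, drop, False, axis=1)
    return (groups * mask).reshape(x.shape), mask.reshape(x.shape)


def apply_fixed_mask(x, pattern=(True, False, True, False)):
    """Apply one fixed 2:4 pattern to every group of four.
    The default pattern is a hypothetical example."""
    if x.shape[-1] % 4 != 0:
        raise ValueError("last dimension must be a multiple of 4")
    if len(pattern) != 4 or sum(pattern) != 2:
        raise ValueError("pattern must keep exactly 2 of 4 values")
    mask = np.tile(np.array(pattern, dtype=bool), x.shape[-1] // 4)
    return x * mask


x = np.array([[1.0, -3.0, 0.5, 2.0, 4.0, 0.1, -0.2, 5.0]])
pruned, mask = prune_2_4(x)
fixed = apply_fixed_mask(x)
```

In this sketch the fixed mask skips the per-group ranking, which illustrates why a fixed pattern avoids the online overhead the abstract mentions. It can also discard large values, which is the kind of loss the Information Restoration block is described as recovering.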

Sessions where this paper appears

-

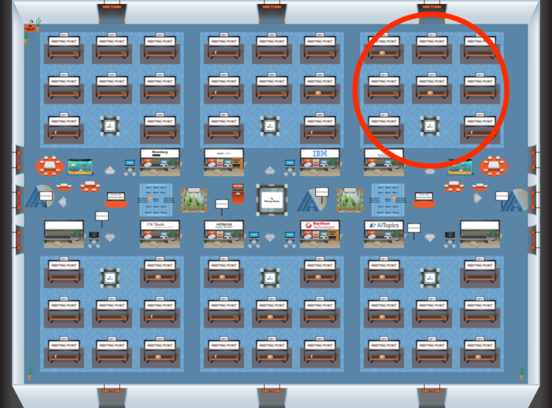

Poster Session 3

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Blue 3

Poster Session 8

Sun, February 27 12:45 AM - 2:30 AM (+00:00)

Blue 3
