Certified Robustness of Nearest Neighbors Against Data Poisoning and Backdoor Attacks

Jinyuan Jia, Yupei Liu, Xiaoyu Cao, Neil Zhenqiang Gong

[AAAI-22] Main Track

Abstract:

Data poisoning and backdoor attacks aim to corrupt a machine learning classifier by modifying, adding, and/or removing some carefully selected training examples, such that the corrupted classifier makes incorrect predictions as the attacker desires. The key idea of state-of-the-art certified defenses against data poisoning and backdoor attacks is to create a majority vote mechanism to predict the label of a testing example, in which each voter is a base classifier trained on a subset of the training dataset. Classical simple learning algorithms such as $k$ nearest neighbors (kNN) and radius nearest neighbors (rNN) have intrinsic majority vote mechanisms. In this work, we show that the intrinsic majority vote mechanisms in kNN and rNN already provide certified robustness guarantees against data poisoning and backdoor attacks. Moreover, our evaluation results on MNIST and CIFAR10 show that the intrinsic certified robustness guarantees of kNN and rNN outperform those provided by state-of-the-art certified defenses. Our results serve as standard baselines for future certified defenses against data poisoning and backdoor attacks.
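To make the intuition behind the intrinsic majority vote concrete, the following is a minimal sketch, not the paper's exact certification procedure. It assumes that each poisoned training example (modified, added, or removed) can remove at most one vote from the predicted label and add at most one vote to a competing label among the $k$ nearest neighbors, so the vote gap shrinks by at most 2 per poisoned example. Ties are handled conservatively by requiring the gap to stay strictly positive. The function names, the Euclidean distance, and the toy data are illustrative assumptions.

```python
import math
from collections import Counter


def knn_vote_counts(train_x, train_y, x, k):
    """Count the labels of the k training points closest to x (Euclidean distance)."""
    order = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], x))
    return Counter(train_y[i] for i in order[:k])


def predict_with_certificate(train_x, train_y, x, k):
    """Return (predicted_label, certified_size).

    certified_size is a conservative number of poisoned training examples
    under which the kNN prediction provably stays the same, ASSUMING each
    poisoned example shrinks the vote gap by at most 2 (simplified model).
    """
    counts = knn_vote_counts(train_x, train_y, x, k)
    # Rank labels by vote count (descending), breaking ties by label value.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    top_label, top_votes = ranked[0]
    # Labels absent from the neighborhood have zero votes.
    runner_up_votes = ranked[1][1] if len(ranked) > 1 else 0
    gap = top_votes - runner_up_votes
    # Require gap - 2e >= 1, i.e. e <= (gap - 1) // 2; never report below 0.
    certified_size = max((gap - 1) // 2, 0)
    return top_label, certified_size


# Toy usage: four points of class 0 near the query, two of class 1 far away.
train_x = [(0, 0), (0, 1), (1, 0), (1, 1), (5, 5), (5, 6)]
train_y = [0, 0, 0, 0, 1, 1]
label, size = predict_with_certificate(train_x, train_y, (0.2, 0.2), k=5)
# label == 0, size == 1: votes are 4 vs 1, so the gap of 3 survives one poisoned example.
```

In this simplified model a larger vote gap directly yields a larger certificate, which is why no extra training or ensembling is needed to obtain a guarantee.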

Sessions where this paper appears

-

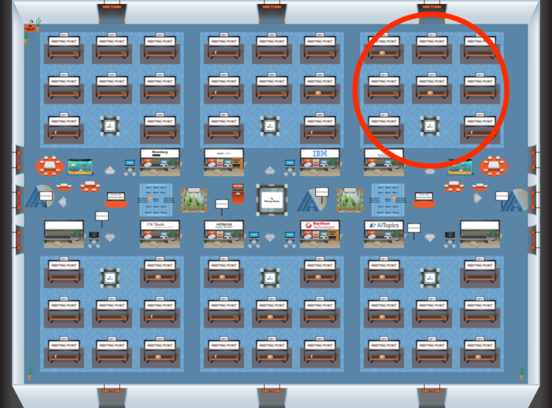

Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Blue 3

- Poster Session 7

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Blue 3