Improving Bayesian Neural Networks by Adversarial Sampling

Jiaru Zhang, Yang Hua, Tao Song, Hao Wang, Zhengui Xue, Ruhui Ma, Haibing Guan

[AAAI-22] Main Track

Abstract:

Bayesian neural networks (BNNs) have drawn extensive interest due to their unique probabilistic representation framework. However, they have few publicized deployments because of relatively poor model performance in real-world applications. In this paper, we argue that the randomness of sampling in Bayesian neural networks causes errors in parameter updates during training and yields some poorly performing sampled models at test time. To solve this, we propose training Bayesian neural networks with an Adversarial Distribution as a theoretical solution. Because the Adversarial Distribution is difficult to calculate analytically, we further present the Adversarial Sampling method as a practical approximation. We conduct extensive experiments with multiple network structures on different datasets, e.g., CIFAR-10 and CIFAR-100. Experimental results validate the theoretical analysis and the effectiveness of Adversarial Sampling in improving model performance. Additionally, models trained with Adversarial Sampling retain their ability to model uncertainty and perform better when predictions are retained according to their uncertainty, which further verifies the generality of the Adversarial Sampling approach.

Sessions where this paper appears

-

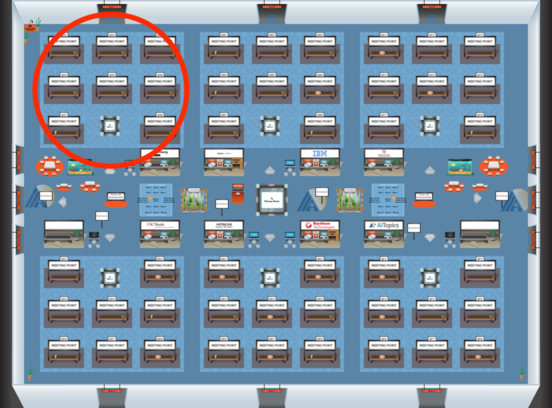

Poster Session 5

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Blue 1

Blue 1

-

Poster Session 10

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Blue 1

Blue 1