Efficient Optimal Transport Algorithm by Accelerated Gradient Descent

Dongsheng An, Na Lei, Xiaoyin Xu, Xianfeng Gu

[AAAI-22] Main Track

Abstract:

Optimal transport (OT) plays an essential role in various areas such as machine learning and deep learning.

However, computing the discrete optimal transport plan for large-scale problems with adequate accuracy and efficiency remains highly challenging.

Recently, methods based on the Sinkhorn algorithm add an entropy regularizer to the primal problem and achieve a trade-off between efficiency and accuracy.
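
For context, the entropic baseline mentioned above can be illustrated with the textbook Sinkhorn matrix-scaling iterations. This is a minimal pure-Python sketch, not the authors' code: the function name `sinkhorn`, the fixed iteration count, and the two-point toy problem are illustrative assumptions, and practical implementations usually work in the log domain to avoid underflow of `exp(-cost / eps)` when `eps` is small.

```python
import math


def sinkhorn(mu, nu, cost, eps, n_iters=1000):
    """Entropy-regularized OT via Sinkhorn matrix scaling (illustrative sketch).

    mu, nu: source / target probability vectors (lists summing to 1).
    cost:   cost[i][j] = c(x_i, y_j), given as a list of rows.
    eps:    entropic regularization weight; a smaller eps gives a plan closer
            to the unregularized optimum but converges more slowly and can
            underflow in exp(-cost / eps).
    Returns the plan P = diag(u) K diag(v) as a list of rows.
    """
    m, n = len(mu), len(nu)
    # Gibbs kernel K_ij = exp(-c_ij / eps).
    K = [[math.exp(-cost[i][j] / eps) for j in range(n)] for i in range(m)]
    u = [1.0] * m
    v = [1.0] * n
    for _ in range(n_iters):
        # Rescale rows so that the row sums of P match mu ...
        u = [mu[i] / sum(K[i][j] * v[j] for j in range(n)) for i in range(m)]
        # ... then rescale columns so that the column sums of P match nu.
        v = [nu[j] / sum(K[i][j] * u[i] for i in range(m)) for j in range(n)]
    return [[u[i] * K[i][j] * v[j] for j in range(n)] for i in range(m)]


# Toy problem (assumed for illustration): two source and two target points, 0/1 costs.
C = [[0.0, 1.0], [1.0, 0.0]]
plan = sinkhorn([0.7, 0.3], [0.4, 0.6], C, eps=0.05)
transport_cost = sum(plan[i][j] * C[i][j] for i in range(2) for j in range(2))
```

On this toy problem the plan should come out close to `[[0.4, 0.3], [0.0, 0.3]]`, with a transport cost of about 0.3, the exact OT cost here.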

In this paper, we propose a novel algorithm based on Nesterov's smoothing technique that further improves both efficiency and accuracy.

Specifically, the non-smooth c-transform of the Kantorovich potential is approximated by the smooth Log-Sum-Exp function, which in turn smooths the original non-smooth Kantorovich dual functional. The smoothed Kantorovich functional can then be optimized efficiently by the fast proximal gradient algorithm (FISTA). Theoretically, the computational complexity of the proposed method is $O(n^{\frac{5}{2}} \sqrt{\log n} /\epsilon)$, which is lower than the current complexity estimate for the Sinkhorn algorithm.
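
A minimal pure-Python sketch of the smoothing idea, under an assumed discrete setup: source weights $\mu_i$, target weights $\nu_j$, costs $c_{ij}$, and a potential $\varphi$ on the $n$ source points. The hard c-transform $\varphi^c_j = \min_i (c_{ij} - \varphi_i)$ is replaced by the Log-Sum-Exp surrogate $-\beta \log \sum_i \exp((\varphi_i - c_{ij})/\beta)$, which lies in $[\varphi^c_j - \beta \log n, \varphi^c_j]$. The resulting concave dual has a $(1/\beta)$-Lipschitz gradient, so accelerated ascent with step size $\beta$ applies; because this dual is unconstrained, the proximal step of FISTA reduces to the identity. The names `smoothed_dual_grad` and `fista_ot`, the smoothing weight `beta`, the zero initialization, and the fixed iteration count are hypothetical choices for illustration; the paper's exact formulation, parameters, and stopping rule may differ.

```python
import math


def smoothed_dual_grad(phi, mu, nu, cost, beta):
    """Value and gradient of an (assumed) smoothed Kantorovich dual E_beta(phi).

    The hard c-transform  min_i (c_ij - phi_i)  is replaced by the Log-Sum-Exp
    surrogate  -beta * log sum_i exp((phi_i - c_ij) / beta).
    Returns (value, gradient, plan) with plan_ij = nu_j * softmax_i(...).
    """
    m, n = len(mu), len(nu)
    value = sum(p * w for p, w in zip(phi, mu))
    plan = [[0.0] * n for _ in range(m)]
    for j in range(n):
        s = [(phi[i] - cost[i][j]) / beta for i in range(m)]
        top = max(s)  # shift by the max for a numerically stable Log-Sum-Exp
        z = sum(math.exp(si - top) for si in s)
        value += nu[j] * (-beta * (top + math.log(z)))
        for i in range(m):
            plan[i][j] = nu[j] * math.exp(s[i] - top) / z
    # dE/dphi_i = mu_i - (row sum i of the induced plan)
    grad = [mu[i] - sum(plan[i]) for i in range(m)]
    return value, grad, plan


def fista_ot(mu, nu, cost, beta, n_iters=500):
    """Maximize the concave smoothed dual with FISTA-style acceleration.

    The gradient is (1/beta)-Lipschitz when sum(nu) == 1, so the step size is beta.
    Hypothetical helper for illustration, not the paper's implementation.
    """
    m = len(mu)
    phi = [0.0] * m  # current iterate
    y = phi[:]       # extrapolated (momentum) point
    t = 1.0
    for _ in range(n_iters):
        _, g, _ = smoothed_dual_grad(y, mu, nu, cost, beta)
        phi_next = [y[i] + beta * g[i] for i in range(m)]  # gradient ascent step
        t_next = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        y = [phi_next[i] + ((t - 1.0) / t_next) * (phi_next[i] - phi[i]) for i in range(m)]
        phi, t = phi_next, t_next
    value, _, plan = smoothed_dual_grad(phi, mu, nu, cost, beta)
    return phi, value, plan


phi, value, plan = fista_ot([0.7, 0.3], [0.4, 0.6], [[0.0, 1.0], [1.0, 0.0]], beta=0.01)
```

On this toy problem, whose exact OT cost is 0.3, the returned dual value stays at or below 0.3 (the smoothed dual never exceeds the unsmoothed one, which weak duality bounds by the OT cost) and, once converged, lies within about $\beta \log 2$ of it. The induced plan matches the target weights $\nu$ exactly by construction, while its row sums approach $\mu$ as the gradient vanishes.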

Empirically, our experiments show that, compared with the Sinkhorn algorithm, the proposed method achieves faster convergence and better accuracy under the same parameter setting.

Sessions where this paper appears

-

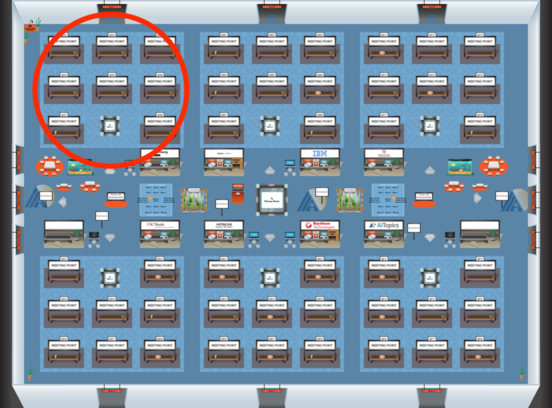

Poster Session 5

Sat, February 26 12:45 AM - 2:30 AM (+00:00)

Blue 1

Poster Session 10

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Blue 1