Abstract:

Maximum Inner Product Search (MIPS) plays an important role in many applications, ranging from information retrieval and recommender systems to natural language processing and machine learning. However, exhaustive MIPS is often expensive and impractical when the number of candidate items is large. The state-of-the-art approximate MIPS method is product quantization with a score-aware loss, which places more weight on items with larger inner product scores. However, it is challenging to extend the score-aware loss to additive quantization because of the parallel-orthogonal decomposition of the residual error. Learning additive quantization with respect to this loss is important because additive quantization can achieve a lower approximation error than product quantization. To this end, we propose a quantization method called Anisotropic Additive Quantization, which combines the score-aware anisotropic loss with additive quantization. To update the codebooks efficiently, we develop a new alternating optimization algorithm. The proposed algorithm is extensively evaluated on three real-world datasets. The experimental results show that it outperforms the state-of-the-art baselines in approximate search accuracy while maintaining similar retrieval efficiency.
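
The two ingredients named in the abstract can be illustrated with a minimal sketch. It is not the paper's algorithm: it only shows how an additive quantizer reconstructs a vector as a sum of one codeword per codebook, and how a score-aware loss can weight the residual component parallel to the data point differently from the orthogonal component. The function names, the weights `w_par` and `w_orth`, and the toy codebooks are assumptions made for illustration; how the weights are chosen and how the codebooks are learned are not covered here.

```python
from typing import List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Plain inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def additive_reconstruct(codebooks: Sequence[Sequence[Vector]],
                         codes: Sequence[int]) -> Vector:
    """Additive quantization: sum one full-dimensional codeword per codebook."""
    dim = len(codebooks[0][0])
    approx = [0.0] * dim
    for book, idx in zip(codebooks, codes):
        for d in range(dim):
            approx[d] += book[idx][d]
    return approx


def anisotropic_loss(x: Vector, approx: Vector,
                     w_par: float, w_orth: float) -> float:
    """Weighted squared error after splitting the residual relative to x.

    w_par and w_orth are hypothetical weights; a score-aware loss would
    typically use w_par >= w_orth. Assumes x is not the zero vector.
    """
    residual = [xi - ai for xi, ai in zip(x, approx)]
    scale = dot(residual, x) / dot(x, x)
    r_par = [scale * xi for xi in x]                        # component along x
    r_orth = [ri - pi for ri, pi in zip(residual, r_par)]   # remainder
    return w_par * dot(r_par, r_par) + w_orth * dot(r_orth, r_orth)


# Toy data (illustrative only): two codebooks with two codewords each.
x = [2.0, 0.0]
codebooks = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.5, 0.5], [1.0, -1.0]],
]
codes = [0, 0]
approx = additive_reconstruct(codebooks, codes)
loss = anisotropic_loss(x, approx, w_par=4.0, w_orth=1.0)
```

With equal weights the loss reduces to the ordinary squared reconstruction error, which makes it easy to see what the anisotropic weighting changes.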

Sessions where this paper appears

-

Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

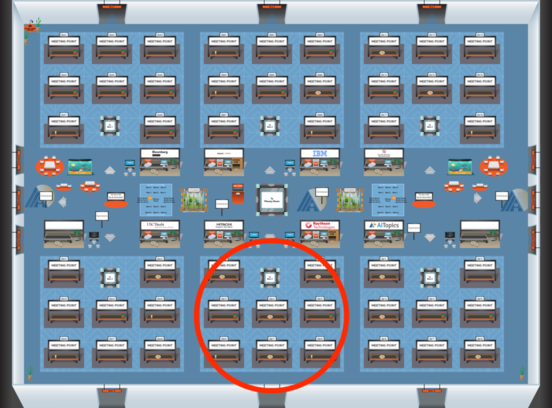

Blue 5

Blue 5

-

Poster Session 12

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Blue 5

Blue 5