Shrinking Temporal Attention in Transformers for Video Action Recognition

Bonan Li, Pengfei Xiong, Congying Han, Tiande Guo

[AAAI-22] Main Track

Abstract:

Spatiotemporal modeling in a unified architecture is key for video action recognition. This paper proposes a Shrinking Temporal Attention Transformer (STAT), which efficiently builds spatiotemporal attention maps by accounting for the attenuation of spatial attention across short and long temporal sequences. Specifically, for short-term temporal tokens, the query token interacts with them in a fine-grained manner to handle short-range motion. The attention then shrinks to a coarse attention over neighborhoods for long-term tokens, providing a larger receptive field for long-range spatial aggregation. Both are combined in a short-long temporal integrated block that models visual appearance and temporal structure concurrently at lower computational cost. We conduct thorough ablation studies and achieve state-of-the-art results on multiple action recognition benchmarks, including Kinetics400 and Something-Something v2, outperforming prior methods with 50% fewer FLOPs and without any pretrained model.
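The short-long idea in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the `short_radius` and `pool` parameters, the use of average pooling as the "coarse" neighborhood attention, and the omission of learned query/key/value projections are all assumptions made for illustration. The sketch only shows how keeping full-resolution tokens for nearby frames and pooled tokens for distant frames reduces the number of keys each query attends to.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def avg_pool_tokens(frame, pool):
    # Average-pool an (H, W, C) token grid over non-overlapping pool x pool windows.
    H, W, C = frame.shape
    assert H % pool == 0 and W % pool == 0
    return frame.reshape(H // pool, pool, W // pool, pool, C).mean(axis=(1, 3))

def short_long_attention(tokens, t, short_radius=1, pool=2):
    """Hypothetical short-long attention for the queries of frame t.

    tokens: array of shape (T, H, W, C).
    Frames within short_radius of t contribute all H*W tokens (fine-grained);
    more distant frames contribute spatially pooled tokens (coarse).
    Assumption: identity projections, keys are reused as values.
    """
    T, H, W, C = tokens.shape
    q = tokens[t].reshape(-1, C)
    keys = []
    for s in range(T):
        if abs(s - t) <= short_radius:
            keys.append(tokens[s].reshape(-1, C))                         # fine
        else:
            keys.append(avg_pool_tokens(tokens[s], pool).reshape(-1, C))  # coarse
    k = np.concatenate(keys, axis=0)
    attn = softmax(q @ k.T / np.sqrt(C), axis=-1)
    return attn @ k, k.shape[0]

rng = np.random.default_rng(0)
video_tokens = rng.standard_normal((8, 4, 4, 16))  # T=8 frames, 4x4 tokens, C=16
out, n_keys = short_long_attention(video_tokens, t=3)
full_keys = 8 * 4 * 4
print(out.shape, n_keys, full_keys)
```

In this toy setting each query attends to 68 keys (3 nearby frames at 16 tokens each plus 5 distant frames at 4 tokens each) instead of the 128 keys of full spatiotemporal attention, which is the kind of saving the abstract attributes to shrinking attention for long-term tokens.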

Sessions where this paper appears

-

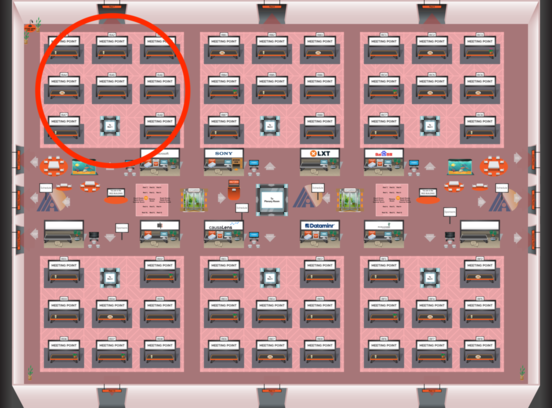

Poster Session 1

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

Red 1

Red 1

- Poster Session 11

Mon, February 28 12:45 AM - 2:30 AM (+00:00)

Red 1