Un-Mix: Rethinking Image Mixtures for Unsupervised Visual Representation Learning

Zhiqiang Shen, Zechun Liu, Zhuang Liu, Marios Savvides, Trevor Darrell, Eric Xing

[AAAI-22] Main Track

Abstract:

Recent unsupervised learning approaches use a siamese-like framework that compares two "views" of the same image to learn representations. Making the two views distinctive is key to ensuring that unsupervised methods learn meaningful information. However, such frameworks are prone to overfitting when the augmentations used to generate the two views are not strong enough, which makes the model over-confident on the training data. This drawback hinders the model from learning subtle variance and fine-grained information. To address this, we introduce the concept of distance in label space into unsupervised learning and make the model aware of the degree of similarity between positive or negative pairs by mixing in the input space, so that the input and loss spaces work collaboratively. Despite its conceptual simplicity, we show empirically that our solution, Unsupervised image mixtures (Un-Mix), learns more robust and generalized representations from the transformed input and the corresponding new label space. Extensive experiments are conducted on CIFAR-10, CIFAR-100, STL-10, Tiny ImageNet and standard ImageNet with popular unsupervised methods including SimCLR, BYOL, MoCo V1&V2 and SwAV. Our proposed image mixture and label assignment strategy obtains consistent improvements of 1-3% while following exactly the same hyperparameters and training procedures as the base methods. Code is publicly available at https://github.com/szq0214/Un-Mix.
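The abstract does not spell out the mixing rule, so the following is only a rough sketch of what "mixing the input space" with a matching soft label could look like. It assumes a mixup-style global blend in which each image is combined with the image at the mirrored batch position, and a mixing coefficient `lam` drawn from a Beta distribution. The names `mix_batch`, `mixed_loss` and `alpha` are hypothetical and are not taken from the authors' repository, which should be consulted for the actual method.

```python
import numpy as np


def mix_batch(images, alpha=1.0, rng=None):
    """Blend each image with the image at the mirrored batch position.

    Assumption: a mixup-style global blend; `alpha` parameterizes the
    Beta distribution from which the mixing coefficient is drawn.
    """
    rng = np.random.default_rng() if rng is None else rng
    lam = float(rng.beta(alpha, alpha))   # mixing coefficient in [0, 1]
    reversed_images = images[::-1]        # batch in reversed order
    mixed = lam * images + (1.0 - lam) * reversed_images
    return mixed, lam


def mixed_loss(loss_vs_original, loss_vs_reversed, lam):
    """Weight two loss terms by the share each source image has in the mixture.

    Assumption: the "label assignment" is a soft weighting by `lam`.
    """
    return lam * loss_vs_original + (1.0 - lam) * loss_vs_reversed
```

In this sketch, `lam` plays the role of a soft similarity degree: a mixed image that is 70% image A and 30% image B contributes 0.7 of the loss computed against A's view and 0.3 of the loss computed against B's view, whatever base objective (contrastive or otherwise) is plugged in.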


Sessions where this paper appears

-

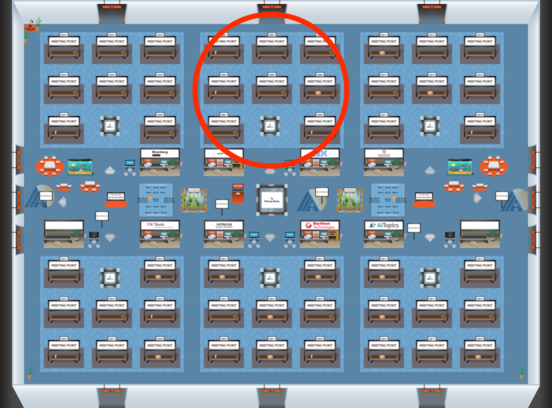

Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

Blue 2

Blue 2

-

Poster Session 12

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Mon, February 28 8:45 AM - 10:30 AM (+00:00)

Blue 2

Blue 2