Adaptive Orthogonal Projection for Continual Learning

Yiduo Guo, Wenpeng Hu, Dongyan Zhao, Bing Liu

[AAAI-22] Main Track

Abstract:

Catastrophic forgetting is a key obstacle to continual learning. One state-of-the-art approach to it is orthogonal projection, which learns each task by updating the network parameters (weights) only in the direction orthogonal to the subspace spanned by all previous task inputs. This ensures no interference with tasks that have already been learned. Although the approach is quite recent, its system OWM (Orthogonal Weights Modification) performs very well compared to other state-of-the-art systems. In this paper, we first discuss an issue we have discovered in the mathematical derivation of this approach and then propose a novel method, called AOP (Adaptive Orthogonal Projection), to resolve it. AOP yields significant accuracy gains in empirical evaluations in both the batch and online continual learning settings, and, unlike replay-based methods, it does not save any previous training data.
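The projection idea can be illustrated for a single linear layer y = Wx. What follows is a minimal sketch under stated assumptions, not OWM itself and not the AOP method of this paper. It keeps the previous-task inputs explicitly (a system that saves no previous data would instead maintain the projector incrementally), builds an orthonormal basis of their span with Gram-Schmidt, and removes that component from every row of a raw weight update. Because each row of the projected update ΔW is then orthogonal to every old input x, ΔW x = 0, so the layer's outputs on previous inputs are unchanged. All function names are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major: Matrix[i] is row i


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def orthonormal_basis(inputs: List[Vector], tol: float = 1e-10) -> List[Vector]:
    """Gram-Schmidt: orthonormal basis for the span of previous-task inputs."""
    basis: List[Vector] = []
    for x in inputs:
        v = list(x)
        for q in basis:
            c = dot(v, q)
            v = [vi - c * qi for vi, qi in zip(v, q)]
        norm = dot(v, v) ** 0.5
        if norm > tol:  # skip inputs that already lie in the span
            basis.append([vi / norm for vi in v])
    return basis


def project_update(grad: Matrix, basis: List[Vector]) -> Matrix:
    """Remove from each row of a raw update its component in span(basis)."""
    projected: Matrix = []
    for row in grad:
        r = list(row)
        for q in basis:
            c = dot(r, q)
            r = [ri - c * qi for ri, qi in zip(r, q)]
        projected.append(r)
    return projected


def matvec(w: Matrix, x: Vector) -> Vector:
    return [dot(row, x) for row in w]


# Toy example (hypothetical data): a 2x3 layer and two previous-task inputs.
old_inputs = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
grad = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]  # raw update proposed for the new task
basis = orthonormal_basis(old_inputs)
delta_w = project_update(grad, basis)
for x in old_inputs:
    # Each entry is zero up to floating-point rounding: no interference.
    print(matvec(delta_w, x))
```

In this toy run the projected update still changes the output for a new input such as [0, 1, -1] (it maps it to [-1, -1]), so the layer can keep learning in directions the old inputs do not occupy.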

Sessions where this paper appears

-

Poster Session 4

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

Fri, February 25 5:00 PM - 6:45 PM (+00:00)

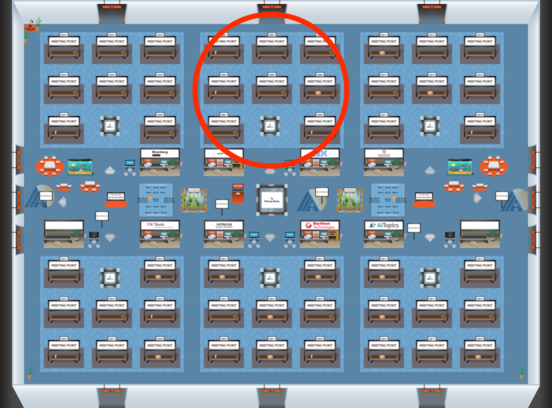

Blue 2

Blue 2

-

Poster Session 7

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Sat, February 26 4:45 PM - 6:30 PM (+00:00)

Blue 2

Blue 2

-

Oral Session 7

Sat, February 26 6:30 PM - 7:45 PM (+00:00)

Sat, February 26 6:30 PM - 7:45 PM (+00:00)

Blue 2

Blue 2