Contact-Distil: Boosting Low Homologous Protein Contact Map Prediction by Self-Supervised Distillation

Qin Wang, Jiayang Chen, Yuzhe Zhou, Yu Li, Liangzhen Zheng, Sheng Wang, Zhen Li, Shuguang Cui

[AAAI-22] Main Track

Abstract:

Accurate protein contact map prediction (PCMP) is essential for precise protein structure estimation and further biological studies. Recent works achieve strong performance on this task when a high-quality multiple sequence alignment (MSA) is available. However, PCMP accuracy drops dramatically when only a poor MSA (e.g., an absolute MSA count below 10) is available. Therefore, in this paper, we propose Contact-Distil to improve low-homology PCMP accuracy through knowledge distillation on a self-supervised model. Specifically, two pre-trained transformers learn the high-quality and low-quality MSA representations in parallel for the teacher and student models, respectively. In addition, co-evolution information is extracted from the pure sequence through a pre-trained ESM-1b model, which provides auxiliary knowledge to improve the student's performance. Extensive experiments show that Contact-Distil outperforms previous state-of-the-art methods by large margins on the CAMEO-L dataset for low-homology PCMP, i.e., improvements of around 13.3% and 9.5% over AlphaFold2 and MSA Transformer, respectively, when the MSA count is less than 10.
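The teacher/student setup described above can be illustrated with a generic distillation objective for contact maps. The sketch below is a hypothetical example, not the paper's exact loss: it assumes both models output an L x L matrix of contact probabilities, scores only residue pairs with sequence separation of at least `min_sep` (an assumed default of 6), and mixes a hard-label binary cross-entropy term with a cross-entropy term against the teacher's soft probabilities (which differs from a KL divergence only by a constant). The names `distillation_loss`, `alpha`, and `min_sep` are illustrative assumptions.

```python
import math
from typing import List

Matrix = List[List[float]]


def _bce(p: float, target: float, eps: float = 1e-7) -> float:
    """Binary cross-entropy of prediction p against a (possibly soft) target."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def distillation_loss(student: Matrix, teacher: Matrix, labels: Matrix,
                      alpha: float = 0.5, min_sep: int = 6) -> float:
    """Hypothetical contact-map distillation loss (not the paper's objective).

    student, teacher: L x L contact probabilities in [0, 1].
    labels: L x L ground-truth contacts (0 or 1).
    alpha: weight on the hard-label term; (1 - alpha) weights the teacher term.
    min_sep: only upper-triangle pairs with j - i >= min_sep are scored.
    """
    n = len(student)
    total, count = 0.0, 0
    for i in range(n):
        for j in range(i + min_sep, n):
            hard = _bce(student[i][j], labels[i][j])   # match ground truth
            soft = _bce(student[i][j], teacher[i][j])  # match teacher (soft target)
            total += alpha * hard + (1.0 - alpha) * soft
            count += 1
    # Average over scored pairs; return 0.0 if the protein is too short.
    return total / count if count else 0.0


if __name__ == "__main__":
    L = 8
    student = [[0.5] * L for _ in range(L)]
    teacher = [[0.5] * L for _ in range(L)]
    labels = [[0.0] * L for _ in range(L)]
    print(distillation_loss(student, teacher, labels))
```

In this sketch, `alpha` controls how much the student relies on the teacher's high-quality-MSA predictions versus the ground truth; the auxiliary ESM-1b features mentioned in the abstract would enter through the student's inputs, which are not modeled here.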

Sessions where this paper appears

- Poster Session 1

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

Thu, February 24 4:45 PM - 6:30 PM (+00:00)

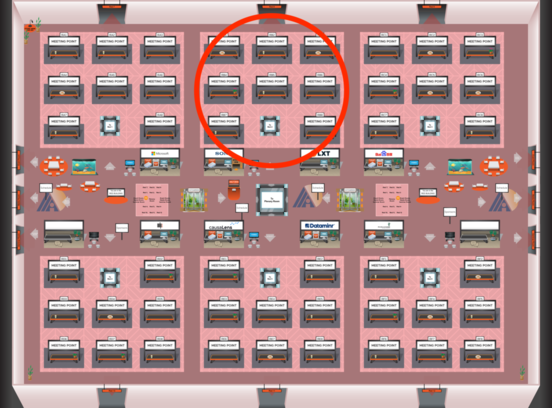

Red 2

- Poster Session 10

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Red 2