Bridging LTLf Inference to GNN Inference for Learning LTLf Formulae

Weilin Luo, Hai Wan, Pingjia Liang, Jianfeng Du, Bo Peng, Delong Zhang

[AAAI-22] Main Track

Abstract:

Learning linear temporal logic on finite traces (LTLf) formulae aims to learn a target formula that characterizes the high-level behavior of a system from observation traces in planning. Existing approaches to learning LTLf formulae, however, can hardly learn accurate LTLf formulae from noisy data. It is challenging to design an efficient search mechanism in the large search space in the form of arbitrary LTLf formulae while alleviating the wrong search bias resulting from noisy data. In this paper, we tackle this problem by bridging LTLf inference to GNN inference. Our key theoretical contribution is showing that GNN inference can simulate LTLf inference to distinguish traces. Based on our theoretical result, we design a GNN-based approach, GLTLf, which combines GNN inference and parameter interpretation to seek the target formula in the large search space. Thanks to the non-deterministic learning process of GNNs, GLTLf is able to cope with noise. We evaluate GLTLf on various datasets with noise. Our experimental results confirm the effectiveness of GNN inference in learning LTLf formulae and show that GLTLf is superior to the state-of-the-art approaches.
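To make "distinguishing traces" concrete, the following is a minimal sketch of standard LTLf evaluation on finite traces, together with the fraction of labelled traces a candidate formula classifies correctly. It is not the GLTLf method and involves no GNN. The tuple encoding of formulae, the operator names, the function names `holds` and `accuracy`, and the `req`/`grant` propositions are all assumptions made for illustration.

```python
from typing import FrozenSet, Sequence, Union

# Hypothetical encoding: an atom is a string; a compound formula is a tuple
# such as ("G", sub), ("U", left, right), ("and", left, right), ("not", sub).
Formula = Union[str, tuple]
Trace = Sequence[FrozenSet[str]]  # one set of true propositions per time step


def holds(formula: Formula, trace: Trace, i: int = 0) -> bool:
    """Return True if `formula` holds at position `i` of the finite `trace`."""
    n = len(trace)
    if isinstance(formula, str):
        return i < n and formula in trace[i]
    op, *args = formula
    if op == "not":
        return not holds(args[0], trace, i)
    if op == "and":
        return holds(args[0], trace, i) and holds(args[1], trace, i)
    if op == "or":
        return holds(args[0], trace, i) or holds(args[1], trace, i)
    if op == "X":  # strong next: a successor state must exist
        return i + 1 < n and holds(args[0], trace, i + 1)
    if op == "F":  # eventually, within the remaining finite trace
        return any(holds(args[0], trace, j) for j in range(i, n))
    if op == "G":  # always, up to the last state
        return all(holds(args[0], trace, j) for j in range(i, n))
    if op == "U":  # until: right holds at some j, left holds at every step before j
        return any(
            holds(args[1], trace, j)
            and all(holds(args[0], trace, k) for k in range(i, j))
            for j in range(i, n)
        )
    raise ValueError(f"unknown operator: {op!r}")


def accuracy(formula: Formula, positives: Sequence[Trace], negatives: Sequence[Trace]) -> float:
    """Fraction of labelled traces that `formula` classifies correctly."""
    correct = sum(holds(formula, t) for t in positives)
    correct += sum(not holds(formula, t) for t in negatives)
    return correct / (len(positives) + len(negatives))


# Illustrative data: G(req -> F grant), written with "or"/"not".
formula = ("G", ("or", ("not", "req"), ("F", "grant")))
granted = [frozenset({"req"}), frozenset(), frozenset({"grant"})]
ignored = [frozenset({"req"}), frozenset(), frozenset()]

print(holds(formula, granted))  # the request is eventually granted
print(holds(formula, ignored))  # the request is never granted
# A noisy dataset: `ignored` is wrongly labelled positive once.
print(accuracy(formula, [granted, granted, ignored], [ignored]))
```

With noisy labels, even the intended formula cannot reach perfect accuracy on the data, which is why a learner that insists on exact consistency with every trace can be pushed toward a wrong formula.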

Sessions where this paper appears

-

Poster Session 2

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

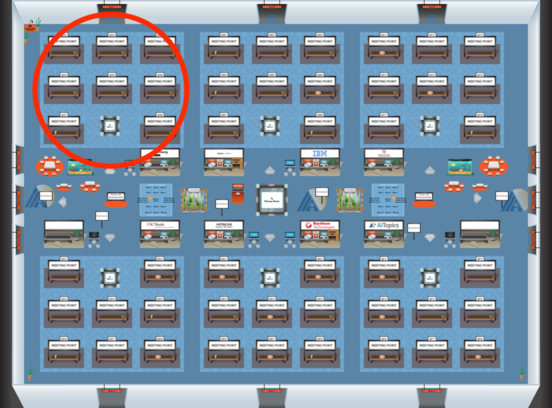

Blue 1

Blue 1

-

Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Blue 1

Blue 1