SmartIdx: Reducing Communication Cost in Federated Learning by Exploiting the CNNs Structures

Donglei Wu, Xiangyu Zou, Shuyu Zhang, Haoyu Jin, Wen Xia, Binxing Fang

[AAAI-22] Main Track

Abstract:

Top-k sparsification is a popular and powerful method for reducing the communication cost in Federated Learning (FL). However, according to our experimental observation, it spends most of the total communication cost on the index of the selected parameters (i.e., their position information), which is inefficient for FL training. To solve this problem, we propose an FL compression algorithm for convolutional neural networks (CNNs), called SmartIdx, by extending the traditional Top-k largest variation selection strategy into the convolution-kernel-based selection, to reduce the proportion of the index in the overall communication cost and thus achieve a high compression ratio. The basic idea of SmartIdx is to improve the 1:1 proportion relationship between the value and index of the parameters to n:1, by regarding the convolution kernel as the basic selecting unit in parameter selection, which can potentially deliver more information to the parameter server under the limited network traffic. To this end, a set of rules is designed for judging which kernel should be selected, and the corresponding packaging strategies are also proposed for further improving the compression ratio. Experiments on mainstream CNNs and datasets show that our proposed SmartIdx achieves a 2.5× to 69.2× higher compression ratio than the state-of-the-art FL compression algorithms without degrading model performance.
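The 1:1 versus n:1 value-to-index relationship described in the abstract can be illustrated with a small back-of-the-envelope sketch. This is not the SmartIdx implementation: the 32-bit value and index widths, the 3×3 kernel size, the function names, and the sum-of-absolute-values scoring rule are all assumptions made here for illustration only, since the abstract does not specify the paper's actual selection rules or packaging strategies.

```python
# Illustrative sketch only. Bit widths, names, and the scoring rule are
# assumptions, not details taken from the SmartIdx paper.

def elementwise_cost_bits(num_values, value_bits=32, index_bits=32):
    """Payload size when every selected value carries its own index (1:1)."""
    return num_values * (value_bits + index_bits)


def kernelwise_cost_bits(num_kernels, kernel_size, value_bits=32, index_bits=32):
    """Payload size when one index covers a whole kernel of n values (n:1)."""
    return num_kernels * (kernel_size * value_bits + index_bits)


def index_fraction(num_indices, total_bits, index_bits=32):
    """Share of the payload that is spent on position information."""
    return num_indices * index_bits / total_bits


def select_topk_kernels(kernel_updates, k):
    """Pick the k kernels with the largest total absolute update.

    kernel_updates is a list of flat lists, one per convolution kernel.
    The scoring rule (sum of absolute values) is a hypothetical stand-in
    for the selection rules designed in the paper.
    """
    scores = [sum(abs(v) for v in kernel) for kernel in kernel_updates]
    ranked = sorted(range(len(kernel_updates)), key=lambda i: scores[i], reverse=True)
    chosen = sorted(ranked[:k])
    # Each selected kernel is sent as (one index, n values).
    return [(i, kernel_updates[i]) for i in chosen]


if __name__ == "__main__":
    n = 9  # values in one 3x3 kernel (assumed kernel size)

    elem_bits = elementwise_cost_bits(n)
    kern_bits = kernelwise_cost_bits(1, n)
    print("element-wise bits:", elem_bits, "index share:", index_fraction(n, elem_bits))
    print("kernel-wise bits:", kern_bits, "index share:", index_fraction(1, kern_bits))

    updates = [[0.1] * n, [1.0] * n, [-2.0] * n, [0.0] * n]
    print("selected kernel indices:", [i for i, _ in select_topk_kernels(updates, 2)])
```

Under these assumed widths, sending nine values individually spends half of the payload on indices, while sending the same nine values as one kernel spends one tenth, which is the effect the abstract attributes to kernel-based selection.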

Sessions where this paper appears

-

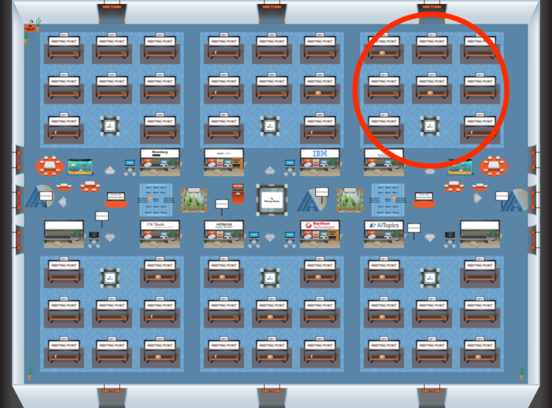

Poster Session 3

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Blue 3

-

Poster Session 8

Sun, February 27 12:45 AM - 2:30 AM (+00:00)

Blue 3
