Synthesis from Satisficing and Temporal Goals

Suguman Bansal, Lydia Kavraki, Moshe Y. Vardi, Andrew Wells

[AAAI-22] Main Track

Abstract:

Reactive synthesis from high-level specifications that combine *hard* constraints expressed in Linear Temporal Logic (LTL) with *soft* constraints expressed by discounted-sum (DS) rewards has applications in planning and reinforcement learning. An existing approach combines techniques from LTL synthesis with optimization for the DS rewards but has failed to yield a sound algorithm. An alternative approach combining LTL synthesis with satisficing DS rewards (rewards that achieve a threshold) is sound and complete for integer discount factors, but, in practice, a fractional discount factor is desired. This work extends the existing satisficing approach, presenting the first sound algorithm for synthesis from LTL and DS rewards with fractional discount factors. The utility of our algorithm is demonstrated on robotic planning domains.

Sessions where this paper appears

Poster Session 2

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

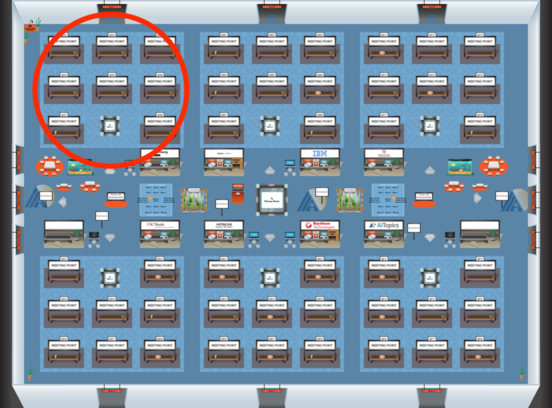

Blue 1

Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Blue 1

Oral Session 2

Fri, February 25 2:30 AM - 3:45 AM (+00:00)

Blue 1