Abstract:

Federated learning (FL) typically updates the global model synchronously: the FL server waits for a specific number of local models from distributed devices before computing and sharing a new global model. We propose asynchronous federated learning (AsyncFL), in which each client continuously uploads its model as its capabilities allow and the FL server decides when to update and broadcast the global model asynchronously. The server aggregates the uploaded models by applying the Boyer–Moore majority voting algorithm to the k-bit quantized weight values. The proposed scheme can speed up convergence of the global model early in the FL process and reduce data exchange once the model has converged.
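To illustrate the aggregation idea, the following is a minimal Python sketch of streaming Boyer–Moore majority voting over quantized weights. It is not the paper's implementation. The uniform quantizer, the clipping range `[-1, 1]`, and the names `quantize` and `AsyncMajorityAggregator` are all assumptions made for illustration; the abstract does not specify them.

```python
from typing import List, Optional, Sequence


def quantize(weight: float, k: int, w_min: float = -1.0, w_max: float = 1.0) -> int:
    """Map a float weight to one of 2**k integer levels.

    Assumption: a uniform quantizer over a fixed clipping range.
    """
    levels = (1 << k) - 1
    clipped = min(max(weight, w_min), w_max)
    return round((clipped - w_min) / (w_max - w_min) * levels)


class AsyncMajorityAggregator:
    """Hypothetical server-side state: one Boyer-Moore voter per weight position.

    Each voter stores only a candidate value and a counter, so a client
    model can be folded in whenever it arrives, without buffering models.
    """

    def __init__(self, num_weights: int) -> None:
        self.candidates: List[Optional[int]] = [None] * num_weights
        self.counts: List[int] = [0] * num_weights

    def receive(self, quantized_model: Sequence[int]) -> None:
        """Fold one client's quantized weights into the running vote."""
        for i, value in enumerate(quantized_model):
            if self.counts[i] == 0:
                # No standing candidate: adopt the incoming value.
                self.candidates[i] = value
                self.counts[i] = 1
            elif value == self.candidates[i]:
                self.counts[i] += 1
            else:
                self.counts[i] -= 1

    def current(self) -> List[Optional[int]]:
        """Return the current majority candidate for every weight position."""
        return list(self.candidates)


# Four clients report 2-bit quantized models of three weights each.
aggregator = AsyncMajorityAggregator(num_weights=3)
for model in ([3, 1, 2], [3, 0, 2], [1, 1, 2], [3, 1, 0]):
    aggregator.receive(model)
global_model = aggregator.current()
```

A Boyer–Moore candidate is guaranteed to be the true majority only when some value occurs in more than half of the votes. Confirming that would require a second counting pass, which this sketch omits.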

Sessions where this paper appears

- Poster Session 6

Sat, February 26 8:45 AM - 10:30 AM (+00:00)

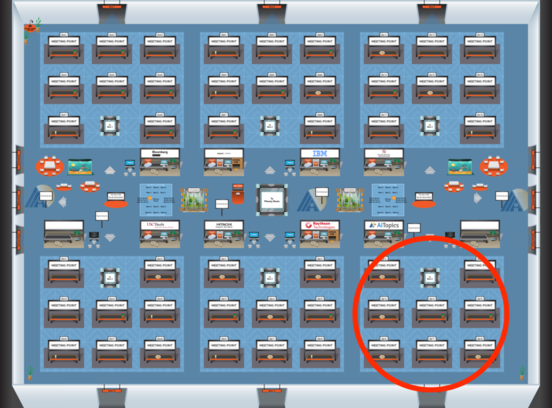

Blue 6

- Poster Session 10

Sun, February 27 4:45 PM - 6:30 PM (+00:00)

Blue 6
