How to Reduce Action Space for Planning Domains? (Student Abstract)

Harsha Kokel, Junkyu Lee, Michael Katz, Shirin Sohrabi, Kavitha Srinivas

[AAAI-22] Student Abstract and Poster Program - FINALIST

Abstract:

While AI planning and Reinforcement Learning (RL) solve sequential decision-making problems, they are based on different formalisms, which leads to a significant difference in their action spaces. When solving planning problems using RL algorithms, we have observed that a naive translation of the planning action space incurs severe degradation in sample complexity. In practice, those action spaces are often engineered manually in a domain-specific manner. In this abstract, we present a method that reduces the parameters of operators in AI planning domains by introducing a parameter seed set problem and casting it as a classical planning task. Our experiment shows that our proposed method significantly reduces the number of actions in the RL environments originating from AI planning domains.
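To illustrate why the number of operator parameters matters for the size of the action space, the following minimal sketch counts the ground actions of a single operator before and after dropping one parameter. It is not the authors' method: the operator name `move`, its parameters, and the object list are hypothetical, and the grounding is a plain untyped enumeration chosen only to show how the count scales.

```python
from itertools import product


def ground_actions(operator, params, objects):
    """Enumerate every grounding of an operator over an untyped object set.

    Each parameter can be bound to any object, so an operator with k
    parameters over n objects yields n**k ground actions.
    """
    return [(operator, *binding) for binding in product(objects, repeat=len(params))]


# Hypothetical objects and operator, used only for illustration.
objects = ["a", "b", "c", "d"]

# Naive translation: every binding of both parameters is a distinct action.
full = ground_actions("move", ["?x", "?y"], objects)

# Reduced operator: one parameter is assumed to be recoverable from the
# state, so only the remaining parameter is exposed as part of the action.
reduced = ground_actions("move", ["?x"], objects)

print(len(full), len(reduced))
```

With four objects, the two-parameter operator grounds to 16 actions and the one-parameter version to 4, so each parameter removed divides that operator's contribution to the action space by the number of candidate objects.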

Sessions where this paper appears

-

Poster Session 3

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

Fri, February 25 8:45 AM - 10:30 AM (+00:00)

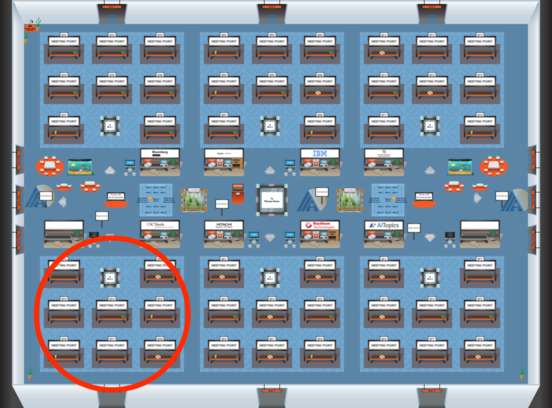

Blue 4

Blue 4

- Poster Session 7: Sat, February 26 4:45 PM - 6:30 PM (+00:00), Blue 4