A Stochastic Momentum Accelerated Quasi-Newton Method for Neural Networks (Student Abstract)

Indrapriyadarsini Sendilkkumaar, Shahrzad Mahboubi, Hiroshi Ninomiya, Takeshi Kamio, Hideki Asai

[AAAI-22] Student Abstract and Poster Program

Abstract:

Incorporating curvature information into stochastic methods has been a challenging task. This paper proposes a momentum-accelerated BFGS quasi-Newton method, in both its full and limited-memory forms, for solving stochastic large-scale non-convex optimization problems in neural networks (NN).

Sessions where this paper appears

- Poster Session 2

Fri, February 25 12:45 AM - 2:30 AM (+00:00)

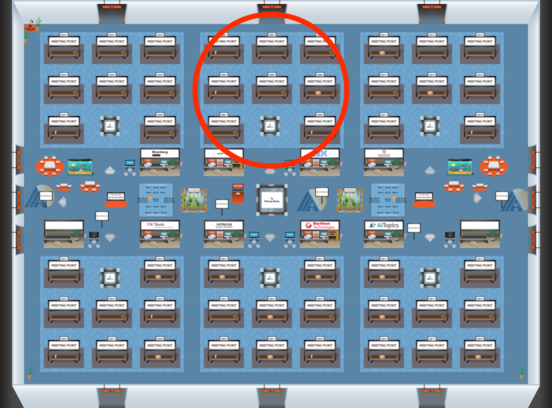

Blue 2

- Poster Session 9

Sun, February 27 8:45 AM - 10:30 AM (+00:00)

Blue 2
